How to Turn Your DeepSeek AI From Zero to Hero


This demonstrates that the MMLU-Pro CS benchmark maintains a high ceiling and remains a valuable tool for evaluating advanced language models. This proves that the MMLU-Pro CS benchmark doesn't have a soft ceiling at 78%. If there is one, it would rather be around 95%, confirming that this benchmark remains a robust and effective tool for evaluating LLMs now and in the foreseeable future. While it is a multiple-choice test, instead of 4 answer options like in its predecessor MMLU, there are now 10 options per question, which drastically reduces the probability of correct answers by chance. Let’s check back in a while when models are getting 80% plus and we can ask ourselves how general we think they are. The former are often overconfident about what can be predicted, and I think overindex on overly simplistic conceptions of intelligence (which is why I find Michael Levin’s work so refreshing). Second, many of the models underlying the API are very large, taking a lot of expertise to develop and deploy and making them very expensive to run. Second, with local models running on consumer hardware, there are practical constraints around computation time - a single run already takes several hours with larger models, and I typically conduct at least two runs to ensure consistency.
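To make the effect of the larger option count concrete, here is a minimal sketch of the chance baseline, assuming uniform random guessing and exactly one correct answer per question. The function name `chance_accuracy` is hypothetical and used only for illustration.

```python
from fractions import Fraction


def chance_accuracy(num_options: int) -> Fraction:
    """Expected accuracy of uniform random guessing (hypothetical helper).

    Assumes each question has exactly one correct answer among num_options.
    """
    if num_options < 1:
        raise ValueError("num_options must be at least 1")
    return Fraction(1, num_options)


mmlu = chance_accuracy(4)       # MMLU: 4 answer options per question
mmlu_pro = chance_accuracy(10)  # MMLU-Pro: 10 answer options per question

print(f"MMLU chance baseline:     {float(mmlu):.0%}")
print(f"MMLU-Pro chance baseline: {float(mmlu_pro):.0%}")
print(f"Guessing is {float(mmlu / mmlu_pro):.1f}x less likely to succeed on MMLU-Pro")
```

Under these assumptions the guessing baseline drops from 25% to 10%, so a given score on MMLU-Pro contains less credit from lucky guesses than the same score on MMLU.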


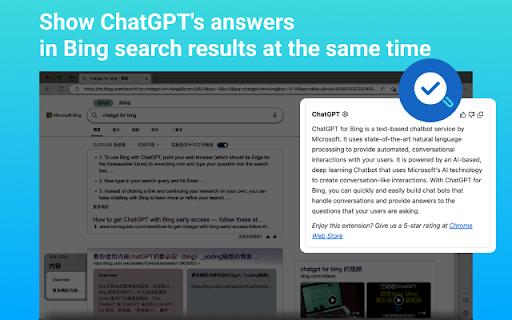

"There are 191 easy, 114 medium, and 28 difficult puzzles, with harder puzzles requiring more detailed image recognition, more advanced reasoning techniques, or both," they write. This comprehensive approach delivers a more accurate and nuanced understanding of each model's true capabilities. It's designed to evaluate a model's ability to understand and apply knowledge across a wide range of subjects, providing a robust measure of general intelligence. Instruction tuning: To improve the performance of the model, they collect around 1.5 million instruction data conversations for supervised fine-tuning, "covering a wide range of helpfulness and harmlessness topics". ChatGPT has a character limit as well but doesn’t currently have a limit on conversations you can have per day. This eval version introduced stricter and more detailed scoring by counting coverage objects of executed code to assess how well models understand logic. Not reflected in the test is how it feels when using it - like no other model I know of, it feels more like a multiple-choice dialog than a normal chat. Using DeepSeek feels a lot like using ChatGPT. LLMs. Microsoft-backed OpenAI cultivated a new crop of reasoning chatbots with its ‘O’ series that were better than ChatGPT. OpenAI offers Canvas, which lets users work with ChatGPT responses like a live document, making it easier to use as a springboard for ideas.

This pragmatic decision is based on several factors: First, I place particular emphasis on responses from my usual work environment, since I frequently use these models in this context during my daily work. The MMLU-Pro benchmark is a comprehensive evaluation of large language models across various categories, including computer science, mathematics, physics, chemistry, and more. 10,000 if not more. However, considering it is based on Qwen and how well both the QwQ 32B and Qwen 72B models perform, I had hoped QVQ being both 72B and reasoning would have had much more of an impact on its general performance. A rival chatbot has shaken Google out of its routine, with the founders who left three years ago re-engaging and more than 20 A.I. Who is Expanding Overseas? Now, confession time - when I was in college I had a few friends who would sit around doing cryptic crosswords for fun. With more categories or runs, the testing duration would have become so long with the available resources that the tested models would have been outdated by the time the study was completed.
